Prompts overview and use cases

Use Prompts to evaluate and refine your LLM prompts and then generate predictions to automate your labeling process.

All you need to get started is an LLM deployment API key and a project.

With Prompts, you can:

- Drastically improve the speed and efficiency of annotations, transforming subject matter experts (SMEs) into highly productive data scientists while reducing the dependency on non-SME manual annotators.

- Increase annotation throughput, accuracy, and consistency, making the process faster and more scalable.

- Empower users to harness the full potential of AI-driven text labeling, setting a new standard for efficiency and innovation in data labeling.

- Leverage subject matter expertise to rapidly bootstrap projects with labels, allowing you to decrease time to ML development.

- Allow your subject matter experts time focus on higher-level tasks rather than being bogged down by repetitive manual work.

Features, requirements, and constraints

| Feature | Support |

|---|---|

| Supported data types | Text Image Note: Images are only supported when uploaded through cloud storage. |

| Supported object tags | Text HyperText Image |

| Supported control tags | Choices (Text and Image)Labels (Text)TextArea (Text and Image)Pairwise (Text and Image)Number (Text and Image)Rating (Text and Image) |

| Class selection | Multi-selection (the LLM can apply multiple labels per task) |

| Supported base models | OpenAI gpt-3.5-turbo-16k* OpenAI gpt-3.5-turbo* OpenAI gpt-4 OpenAI gpt-4-turbo OpenAI gpt-4o OpenAI gpt-4o-mini Azure OpenAI chat-based models Custom LLM Note: We recommend against using GPT 3.5 models, as these can sometimes be prone to rate limit errors and are not compatible with Image data. |

| Text compatibility | Task text must be utf-8 compatible |

| Task size | Total size of each task can be no more than 1MB (approximately 200-500 pages of text) |

| Network access | If you are using a firewall or restricting network access to your OpenAI models, you will need to allow the following IPs: 3.219.3.197 34.237.73.3 4.216.17.242 |

| Required permissions | Owners, Administrators, Managers – Can create Prompt models and update projects with auto-annotations. Managers can only apply models to projects in which they are already a member. Reviewers and Annotators – No access to the Prompts tool, but can see the predictions generated by the prompts from within the project (depending on your project settings). |

| ML backend support | Prompts should not be used with a project that is connected to an ML backend, as this can affect how certain evaluation metrics are calculated. |

| Enterprise vs. Open Source | Label Studio Enterprise (Cloud only) Starter Cloud |

Use cases

Auto-labeling with Prompts

Prompts allows you to leverage LLMs to swiftly generate accurate predictions, enabling instant labeling of thousands of tasks.

By utilizing AI to handle the bulk of the annotation work, you can significantly enhance the efficiency and speed of your data labeling workflows. This is particularly valuable when dealing with large datasets that require consistent and accurate labeling. Automating this process reduces the reliance on manual annotators, which not only cuts down on labor costs but also minimizes human errors and biases. With AI’s ability to learn from the provided ground truth annotations, you can maintain a high level of accuracy and consistency across the dataset, ensuring high-quality labeled data for training machine learning models.

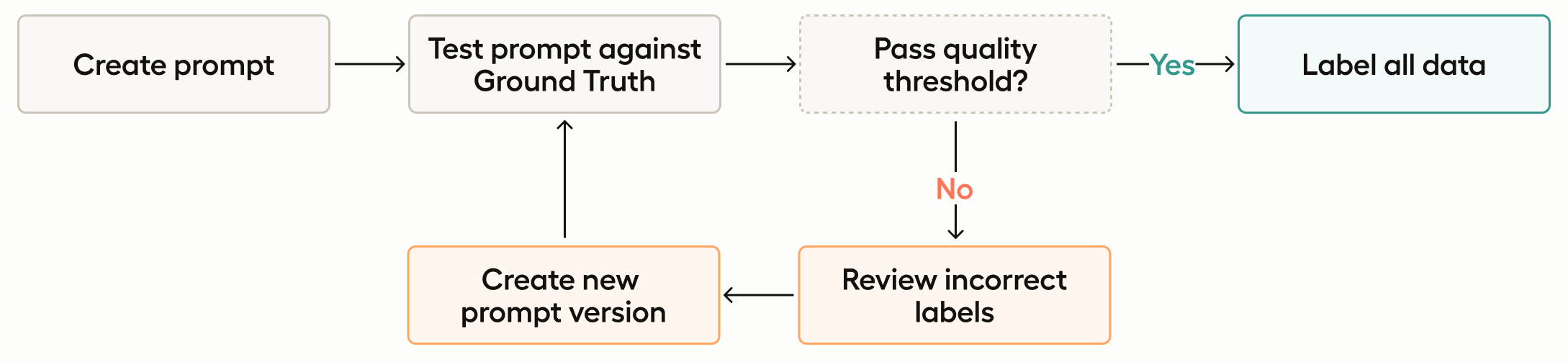

Workflow

If you don’t already have one, create a project and import a text-based dataset.

Annotate a subset of tasks, marking as many as possible as ground truth annotations. The more data you have for the prompt evaluation, the more confident you can be with the results.

If you want to skip this step, see the bootstrapping use case outlined below.

Go to the Prompts page and create a new Prompt. If you haven’t already, you will also need to add an API key to connect to your model.

Write a prompt and evaluate it against your ground truth dataset.

When your prompt is returning an overall accuracy that is acceptable, you can choose to apply it to the rest of the tasks in your project.

Bootstrapping projects with Prompts

In this use case, you do not need a ground truth annotation set. You can use Prompts to generate predictions for tasks without returning accuracy scores for the predictions it generates.

This use case is ideal for organizations looking to kickstart new initiatives without the initial burden of creating extensive ground truth annotations, allowing you to start analyzing and utilizing your data immediately. This is particularly beneficial for projects with tight timelines or limited resources.

By generating predictions and converting them into annotations, you can also quickly build a labeled dataset, which can then be refined and improved over time with the help of subject matter experts. This approach accelerates the project initiation phase, enabling faster experimentation and iteration.

Additionally, this workflow provides a scalable solution for continuously expanding datasets, ensuring that new data can be integrated and labeled efficiently as the project evolves.

note

You can still follow this use case even if you already have ground truth annotations. You will have the option to select a task sample set without taking ground truth data into consideration.

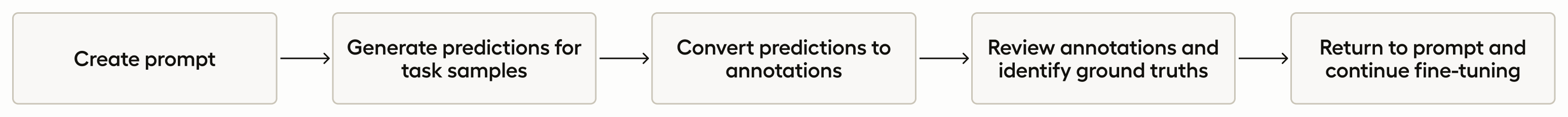

Workflow

If you don’t already have one, create a project and import a text-based dataset.

Go to the Prompts page and create a new Prompt. If you haven’t already, you will also need to add an API key to connect to your model.

Write a prompt and run it against your task samples.

When you run your prompt, you create predictions for the selected sample (this can be a portion of the project tasks or all tasks). From here you have several options:

- Continue to work on your prompt and generate new predictions each time you run it against your sample.

- Return to the project and begin reviewing your predictions. If you convert your predictions into annotations, you can use subject matter experts and annotators to begin interacting with those the annotations.

- As you review the annotations, you can identify ground truths. With a ground truth dataset, you can further refine your prompt using its accuracy score.

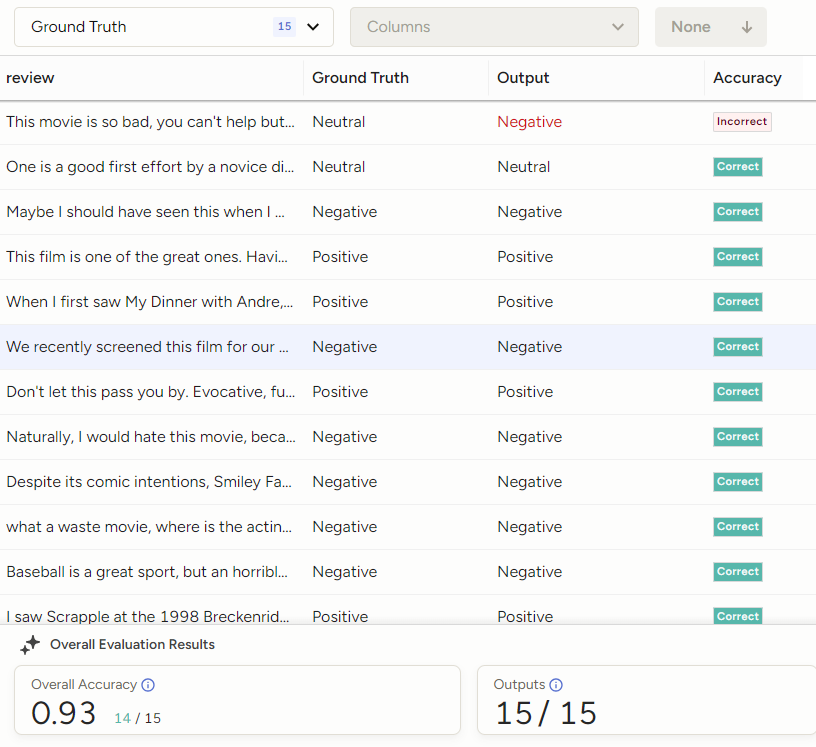

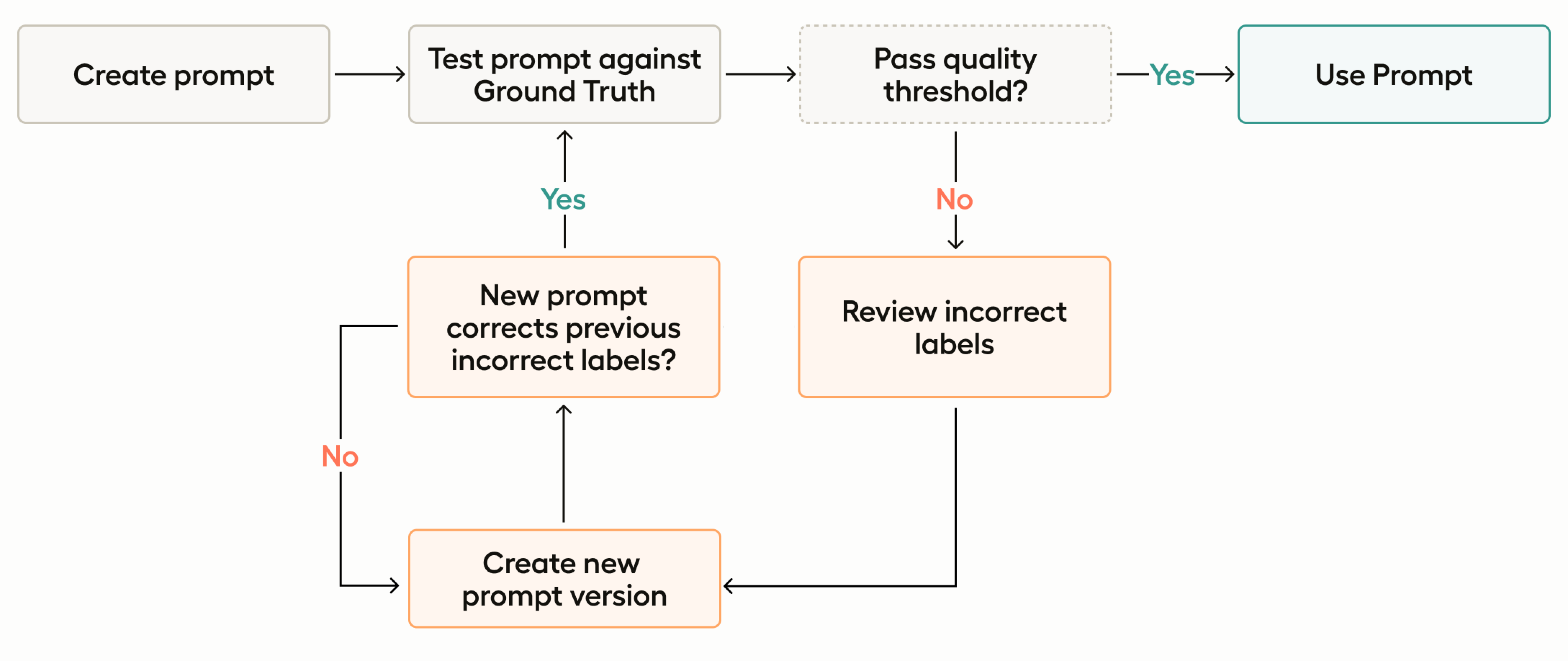

Prompt evaluation and fine-tuning

As you evaluate your prompt against the ground truth annotations, you will be given an accuracy score for each version of your prompt. You can use this to iterate your prompt versions for clarity, specificity, and context.

This accuracy score provides a measurable way to evaluate and refine the performance of your prompt. By tracking accuracy, you can ensure that the automated labels generated by the LLM are consistent with ground truth data.

This feedback loop allows you to iteratively fine-tune your prompts, optimizing the accuracy of predictions and enhancing the overall reliability of your data annotation processes. In industries where data accuracy directly impacts decision-making and operational efficiency, this capability is invaluable.

Workflow

If you don’t already have one, create a project and import a text-based dataset.

Annotate a subset of tasks, marking as many as possible as ground truth annotations. The more data you have for the prompt evaluation, the more confident you can be with the results.

Go to the Prompts page and create a new Prompt. If you haven’t already, you will also need to add an API key to connect to your model.

Write a prompt and evaluate it against your ground truth dataset.

Continue iterating and refining your prompt until you reach an acceptable accuracy score.

Example project types

Text classification

Text classification is the process of assigning predefined categories or labels to segments of text based on their content. This involves analyzing the text and determining which category or label best describes its subject, sentiment, or purpose. The goal is to organize and categorize textual data in a way that makes it easier to analyze, search, and utilize.

Text classification labeling tasks are fundamental in many applications, enabling efficient data organization, improving searchability, and providing valuable insights through data analysis. Some examples include:

- Spam Detection: Classifying emails as “spam” or “ham” (not spam).

- Sentiment Analysis: Categorizing user reviews as “positive,” “negative,” or “neutral.”

- Topic Categorization: Assigning articles to categories like “politics,” “sports,” “technology,” etc.

- Support Ticket Classification: Labeling customer support tickets based on the issue type, such as “billing,” “technical support,” or “account management.”

- Content Moderation: Identifying and labeling inappropriate content on social media platforms, such as “offensive language,” “hate speech,” or “harassment.”

Named entity recognition (NER)

A Named Entity Recognition (NER) labeling task involves identifying and classifying named entities within text. For example, people, organizations, locations, dates, and other proper nouns. The goal is to label these entities with predefined categories that make the text easier to analyze and understand. NER is commonly used in tasks like information extraction, text summarization, and content classification.

For example, in the sentence “Heidi Opossum goes grocery shopping at Aldi in Miami” the NER task would involve identifying “Aldi” as a place or organization, “Heidi Opossum” as a person (even though, to be precise, she is an iconic opossum), and “Miami” as a location. Once labeled, this structured data can be used for various purposes such as improving search functionality, organizing information, or training machine learning models for more complex natural language processing tasks.

NER labeling is crucial for industries such as finance, healthcare, and legal services, where accurate entity identification helps in extracting key information from large amounts of text, improving decision-making, and automating workflows.

Some examples include:

- News and Media Monitoring: Media organizations use NER to automatically tag and categorize entities such as people, organizations, and locations in news articles. This helps in organizing news content, enabling efficient search and retrieval, and generating summaries or reports.

- Intelligence and Risk Analysis: By extracting entities such as personal names, organizations, IP addresses, and financial transactions from suspicious activity reports or communications, organizations can better assess risks and detect fraud or criminal activity.

- Specialized Document Review: Once trained, NER can help extract industry-specific key entities for better document review, searching, and classification.

- Customer Feedback and Product Review: Extract named entities like product names, companies, or services from customer feedback or reviews. This allows businesses to categorize and analyze feedback based on specific products, people, or regions, helping them make data-driven improvements.

Text summarization

Text summarization involves condensing large amounts of information into concise, meaningful summaries.

Models can be trained or fine-tuned to recognize essential information within a document and generate summaries that retain the core ideas while omitting less critical details. This capability is especially valuable in today’s information-heavy landscape, where professionals across various fields are often overwhelmed by the sheer volume of text data.

Some examples include:

- Customer Support and Feedback Analysis: Companies receive vast volumes of customer support tickets, reviews, and feedback that are often repetitive or lengthy. Auto-labeling can help summarize these inputs, focusing on core issues or themes, such as “billing issues” or “technical support.”

- News Aggregation and Media Monitoring: News organizations and media monitoring platforms need to process and distribute news stories efficiently. Auto-labeling can summarize articles while tagging them with labels like “politics,” “economy,” or “health,” making it easier for users to find relevant stories.

- Document Summarization: Professionals often need to quickly understand the key points in lengthy contracts, research papers, and files.

- Educational Content Summarization: EEducators and e-learning platforms need to distill complex material into accessible summaries for students. Auto-labeling can summarize key topics and categorize them under labels like “concept,” “example,” or “important fact.”

Image captioning and classification

Image captioning involves applying descriptive text for images. This has valuable applications across industries, particularly where visual content needs to be systematically organized, analyzed, or made accessible.

You can also use Prompts to automatically categorizing images into predefined classes or categories, ensuring consistent labeling of large image datasets.

Some examples include:

E-commerce Product Cataloging: Online retailers often deal with thousands of product images that require captions describing their appearance, features, or categories.

Digital Asset Management (DAM): Companies managing large libraries of images, such as marketing teams, media organizations, or creative agencies, can use auto-labeling to caption, tag, and classify their assets.

Content Moderation and Analysis: Platforms that host user-generated content can employ image captioning to analyze and describe uploaded visuals. This helps detect inappropriate content, categorize posts (e.g., “Outdoor landscape with a sunset”), and surface relevant content to users. You may also want to train a model to classify image uploads into categories such as “safe,” “explicit,” or “spam.”

Accessibility for Visually Impaired Users: Image captioning is essential for making digital content more accessible to visually impaired users by providing descriptive alt-text for images on websites, apps, or documents. For instance, an image of a cat playing with yarn might generate the caption, “A fluffy orange cat playing with a ball of blue yarn.”